Routers: Control Plane protections and their implications


While working on the upcoming hands-on MTU tutorial using Linux network namespaces (fragmentation, PMTUD, etc.), I thought some readers would appreciate a short detour into why certain ICMP packets can get filtered or heavily rate-limited by modern routers.

Modern switches and routers are engineering marvels. With highly sophisticated hardware, they are capable of forwarding insane amounts of traffic.

In this post, we will explore how network devices protect their “brains”, the Control Plane, from Denial of Service (DoS) attacks, and how these mechanisms have subtle effects on the user traffic they forward.

But before that, let’s start with a short, high-level overview of some typical switch/router hardware architectures, to better understand these mechanisms.

The need for speed #

To put things into perspective, at the time of writing, a fully maxed-out Cisco NCS 6000 series can forward up to 256 Tbps. A more “common” Cisco ASR 9000 Series router is able to route up to 160 Tbps on 400G and 800G interfaces.

Similarly, a single 2U Celestica DS6001 datacenter spine switch with L3 routing capabilities, powered by the latest Broadcom Tomahawk 6, can forward up to 102.4 Tbps (with a much smaller FIB size, though).

Fast path vs slow path #

To achieve these impressive figures, switches and routers are architected with a functional split between:

- The Forwarding Plane (also called Data Plane or data path), in charge of forwarding packets at full speed between ports. It’s usually made of dedicated hardware such as Application-Specific Integrated Circuits (ASICs) and Network Processing Units (NPUs), which do the heavy lifting of packet forwarding. This is also known as the fast path.

- The Control Plane, in charge of determining how packets should be forwarded in the fast path. This is where routing protocols, L2 neighbour discovery protocols like ARP and NDP, etc. run. The Control Plane is also known as the slow path, as exception packets are brought there from the dataplane.

- The Management Plane, in charge of configuring the Control Plane. This is where things like the SSH daemon, the Command Line Interface (CLI), NETCONF or monitoring protocols such as SNMP live.

The Control and Management Planes typically run on the same embedded host board. In modular or chassis routers, these modules are called Supervisor cards (Cisco), Routing Engines (REs, Juniper) or Main Processing Units (MPUs, Huawei), to name a few.

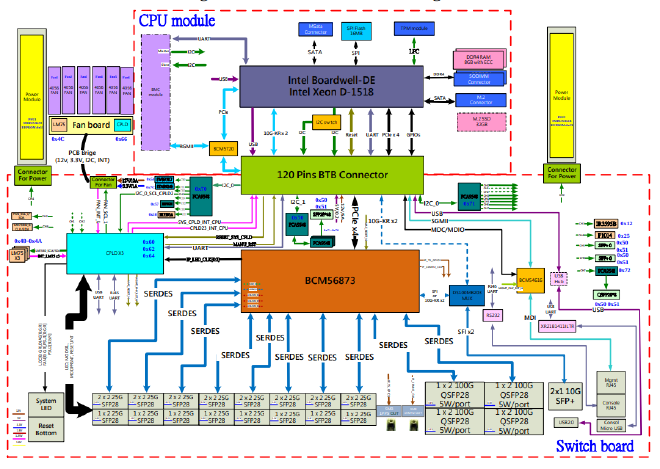

The diagram below shows a very simplified representation of two typical switch/router1 architectures, with their Control Plane (turquoise) and Forwarding Plane (red) components:

Feeding the Control Plane #

The Control Plane’s routing processes (IS-IS, OSPF, BGP), L2 neighbour discovery protocols (ARP, NDP) and other protocols such as STP/xSTP, LACP, LLDP and NTP send and receive packets to exchange state with other devices².

This is achieved through dedicated channels (e.g. PCIe) or ports between the Control Plane and the Forwarding Plane. In the diagram above, you can see the connection between the forwarding ASICs/NPUs and the host CPU (left). In chassis routers (right), CPU modules are plugged directly into the backplane/midplane or switching fabric, a very fast interconnect between the different cards.

It’s important to note that, in many cases, Control Plane traffic is forwarded over the same ports as the user traffic, i.e. the traffic it is responsible for routing or transporting.

This ensures that dataplane failures caused by misconfigurations, hardware issues etc. are always reflected in the routing decisions (topological congruence).

The packet exception path #

Optimizing for speed always comes at a cost… in the case of network equipment, twice: 🤑 and versatility.

Networking hardware is highly optimized for common operations such as VLAN and tunnel encapsulation/decapsulation, MPLS label stack manipulation, IP lookup and forwarding, ACL processing etc., which make up the bulk of the traffic. However, it cannot always handle exceptional conditions directly in hardware³:

- IP/MPLS TTL exceeded, which requires generating ICMP messages and may need to be logged.

- MTU exceeded, which may require either fragmenting packets⁴ (DF=0) or generating ICMP messages (DF=1), and may need to be logged.

- Corrupted (e.g. bad checksums) or invalid frames, which may indicate encapsulation misconfigurations, MTU issues, hardware failures etc., and may need to be logged.

- RPF or split horizon violations, which may indicate routing inconsistencies, misconfigurations etc., and may need to be logged.

- Invalid VLANs, L2 or L3 lookup misses, Martian addresses, which may also need to be logged.

- …
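To make the split between fast path and exception path more concrete, here is a minimal Python sketch of the per-packet checks for the first two cases in the list. It does not describe any specific ASIC: the `Packet` fields, the `Verdict` names and the `hw_can_fragment` flag are hypothetical, and real pipelines check many more conditions (RPF, lookup misses, etc.).

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    # Hypothetical outcomes of the fast-path checks.
    FORWARD = "forward in hardware"
    PUNT_TTL_EXCEEDED = "punt: send ICMP Time Exceeded"
    PUNT_FRAG_NEEDED = "punt: DF=1, send ICMP Fragmentation Needed"
    PUNT_FRAGMENT = "punt: DF=0, fragment in software"


@dataclass
class Packet:
    ttl: int     # IP TTL as received
    length: int  # total IP packet length, in bytes
    df: bool     # Don't Fragment bit


def fast_path_verdict(pkt: Packet, egress_mtu: int, hw_can_fragment: bool = False) -> Verdict:
    """Simplified exception checks a forwarding pipeline might run per packet."""
    if pkt.ttl <= 1:
        # The TTL would reach 0 after the decrement: the packet cannot be forwarded.
        return Verdict.PUNT_TTL_EXCEEDED
    if pkt.length > egress_mtu:
        if pkt.df:
            return Verdict.PUNT_FRAG_NEEDED
        if not hw_can_fragment:
            return Verdict.PUNT_FRAGMENT
        # Otherwise the hardware fragments it and the packet stays in the fast path.
    return Verdict.FORWARD


print(fast_path_verdict(Packet(ttl=64, length=1500, df=True), egress_mtu=1500).name)  # FORWARD
print(fast_path_verdict(Packet(ttl=64, length=9000, df=True), egress_mtu=1500).name)  # PUNT_FRAG_NEEDED
```

Only packets hitting one of the `PUNT_*` branches cost Control Plane cycles, which is why their volume, rather than their content, is what matters.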

The Control Plane, therefore, also receives exception packets that were not originally destined for the router itself. This matters, because the Control Plane’s capacity to process packets is far lower than the Forwarding Plane’s.

In fact, any traffic directed or diverted to the Control Plane, whether intentional or not, is a potential Denial-of-Service (DoS) vector for the Control Plane, and must be handled with care.

Real world hardware architectures #

Edgecore DCS203/AS7326-56X #

The Open Compute Project has released a variety of network switch specifications over the years, contributed by multiple vendors, and covering different roles in the network.

One example is EdgeCore’s DCS203 (AS7326-56X)[1], a datacenter Top-of-Rack (ToR) switch with L3 capabilities, whose hardware specification is described in this document:

Credit: The OpenCompute Project, EdgeCore, Broadcom, etc. OCP-CLA license.

In the diagram above, the lower part is the Switch Board, or Forwarding Plane. It contains the front ports (SFP28/QSFP28, two SFP+), the management and console RJ45 ports, and the main forwarding ASIC, a Broadcom Trident3 (BCM56873).

The CPU module is essentially a small host with its own CPU, RAM, storage etc., and it is the element that runs the Control and Management Plane software.

These two parts are connected via the PCIe bus (shown in black as PCIe x4):

The BCM56873 is connected to CPU module via PCIe Gen2.0 x 4 bus

which gives a theoretical maximum bandwidth of ~16 Gbps per direction (4 lanes × 500 MB/s), roughly 200 times lower than the maximum switching capacity of the BCM56873, which is 3.2 Tbps.
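The ~16 Gbps figure can be derived from the PCIe Gen2 signalling rate (5 GT/s per lane) and its 8b/10b line encoding. The sketch below is a back-of-the-envelope calculation that ignores PCIe protocol overhead (TLP/DLLP headers), so the usable bandwidth is lower in practice.

```python
def pcie_gen2_gbps(lanes: int) -> float:
    """Theoretical PCIe Gen2 bandwidth per direction, in Gbps.

    5 GT/s per lane with 8b/10b encoding (8 data bits per 10 line bits)
    gives 4 Gbps (500 MB/s) per lane. Protocol overhead is ignored.
    """
    gt_per_s = 5
    return gt_per_s * 8 / 10 * lanes


cpu_link_gbps = pcie_gen2_gbps(4)  # BCM56873 <-> CPU module link (x4)
asic_gbps = 3_200.0                # BCM56873 switching capacity (3.2 Tbps)

print(f"CPU link: {cpu_link_gbps:.0f} Gbps per direction")        # CPU link: 16 Gbps per direction
print(f"ASIC capacity / CPU link: {asic_gbps / cpu_link_gbps:.0f}x")  # ASIC capacity / CPU link: 200x
```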

Cisco Catalyst 9500 with Silicon One #

You can check the Cisco Catalyst 9500 white paper, which offers great insights on its architecture and the use of Cisco’s Silicon One. Don’t miss the interesting packet walks.

Protecting the Control Plane 🛡️ #

Control Plane failures can not only bring down the device but, in some cases, also trigger cascading effects on adjacent routers, resulting in painful outages or even Internet-level outages (🔥🔥).

The Control Plane must be guaranteed CPU time and memory, and Control Plane traffic must be prioritized over user traffic, both in the dataplane and on the Forwarding Plane to Control Plane interface.

Filtering malicious traffic targeting the router #

The first line of defense is obvious: protect the router from any malicious or unintended traffic. It’s worth noting that user traffic is never brought to the CPU unless:

- It’s directed to the router itself.

- It’s an exception packet.

Exception packets are legitimate packets or, more precisely, there is no way to distinguish them from legitimate ones. As in any other DoS attack, it’s the volume that makes them dangerous for the Control Plane.

Access Control Lists (ACLs) or firewall rules are usually applied both at the ASIC level and at the CPU level, to make sure only intended traffic targeting the router itself reaches the CPU.
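As an illustration, a receive ACL protecting the CPU boils down to an ordered list of permit rules followed by an implicit deny. The minimal Python sketch below assumes a hypothetical policy (BGP only from one known peer, SSH only from a management prefix) and uses documentation address ranges; real devices express this in their own ACL/filter syntax and evaluate it in hardware.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                 # "permit" or "deny"
    proto: str                  # "tcp", "udp", "icmp" or "any"
    src: ipaddress.IPv4Network  # allowed source prefix
    dport: Optional[int] = None  # destination port, None matches any


# Hypothetical receive ACL for traffic destined to the router itself.
# Simplified: a real policy would also match BGP replies with source port 179.
RECEIVE_ACL = [
    Rule("permit", "tcp", ipaddress.ip_network("192.0.2.1/32"), 179),    # BGP peer
    Rule("permit", "tcp", ipaddress.ip_network("198.51.100.0/24"), 22),  # SSH from mgmt
]


def allowed_to_cpu(proto: str, src: str, dport: Optional[int], acl=RECEIVE_ACL) -> bool:
    """First-match evaluation with an implicit deny at the end."""
    addr = ipaddress.ip_address(src)
    for rule in acl:
        if rule.proto not in ("any", proto):
            continue
        if addr not in rule.src:
            continue
        if rule.dport is not None and rule.dport != dport:
            continue
        return rule.action == "permit"
    return False  # implicit deny: everything else never reaches the CPU


print(allowed_to_cpu("tcp", "192.0.2.1", 179))       # True
print(allowed_to_cpu("tcp", "203.0.113.9", 179))     # False
print(allowed_to_cpu("icmp", "198.51.100.7", None))  # False
```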

QoS and rate-limiting traffic to the CPU #

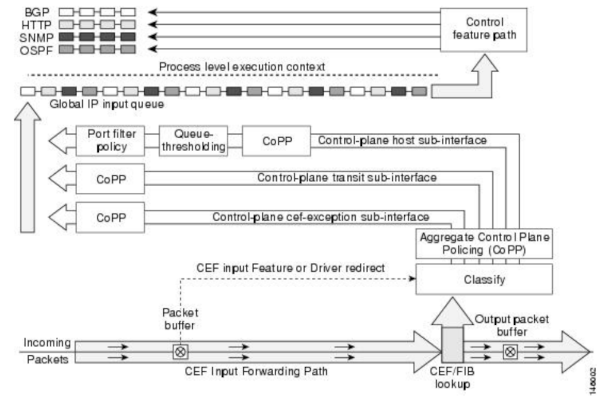

Line-cards and ASICs implement a strict Quality of Service (QoS) prioritization and rate limiting to traffic directed or punted to the CPU.

Traffic is classified 5 (Ingress) into different QoS classes, and classes mapped to different hardware queues, so that scheduling or priority can be enforced. You can see an example of this process from the Cisco IOS Control Plane Protection documentation:

Copyright: Cisco Systems.
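The classification step can be sketched as a function that maps each punted packet to a CPU queue based on header fields. The queue names, their priority order and the matched protocols below are assumptions chosen for illustration, not any vendor’s defaults. Note that the DSCP value is deliberately ignored, since it can be forged.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional

ETH_P_IP, ETH_P_ARP, ETH_P_SLOW = 0x0800, 0x0806, 0x8809  # IPv4, ARP, slow protocols (LACP)
IPPROTO_TCP, IPPROTO_UDP, IPPROTO_OSPF = 6, 17, 89


class CpuQueue(IntEnum):
    # Hypothetical CPU queues; a higher value is served first by a strict-priority scheduler.
    LOW = 0         # exception packets and anything unclassified
    MANAGEMENT = 1  # SSH, SNMP
    ROUTING = 2     # BGP, OSPF
    L2_CONTROL = 3  # ARP, LACP


@dataclass
class PuntedPacket:
    ethertype: int
    ip_proto: Optional[int] = None
    sport: Optional[int] = None
    dport: Optional[int] = None
    is_exception: bool = False  # set by the fast path (TTL exceeded, MTU exceeded, ...)
    dscp: int = 0               # never used for classification: it can be forged


def classify(pkt: PuntedPacket) -> CpuQueue:
    if pkt.is_exception:
        return CpuQueue.LOW
    if pkt.ethertype in (ETH_P_ARP, ETH_P_SLOW):
        return CpuQueue.L2_CONTROL
    if pkt.ethertype == ETH_P_IP:
        if pkt.ip_proto == IPPROTO_OSPF:
            return CpuQueue.ROUTING
        if pkt.ip_proto == IPPROTO_TCP and 179 in (pkt.sport, pkt.dport):
            return CpuQueue.ROUTING  # BGP, in either direction
        if (pkt.ip_proto, pkt.dport) in ((IPPROTO_TCP, 22), (IPPROTO_UDP, 161)):
            return CpuQueue.MANAGEMENT
    return CpuQueue.LOW


bgp = PuntedPacket(ETH_P_IP, IPPROTO_TCP, sport=51000, dport=179)
forged = PuntedPacket(ETH_P_IP, IPPROTO_UDP, dport=53, dscp=48)  # marked CS6, still LOW
print(classify(bgp).name, classify(forged).name)  # ROUTING LOW
```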

Non-essential traffic, such as exception packets, is generally heavily rate-limited using policers, at least at the hardware level. Control Plane traffic is usually policed too, but with higher limits, to avoid starving lower classes during unintentional DoSs. Traffic generated by the CPU (egress) is sometimes also classified, prioritized and policed.
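Policers are commonly modelled as token buckets. The sketch below is a simplified single-rate, two-color policer that counts packets rather than bytes (an assumption; depending on the platform, real policers may be configured in bps or pps): each packet consumes one token, tokens refill at `rate_pps`, and packets arriving with an empty bucket are dropped.

```python
class TokenBucketPolicer:
    """Single-rate, two-color policer: conforming packets pass, excess ones drop."""

    def __init__(self, rate_pps: float, burst: int) -> None:
        self.rate = rate_pps        # token refill rate, in packets per second
        self.burst = burst          # bucket depth, i.e. the largest allowed burst
        self.tokens = float(burst)  # start with a full bucket
        self.last = 0.0             # timestamp of the previous packet, in seconds

    def allow(self, now: float) -> bool:
        # Refill proportionally to the elapsed time, capped at the bucket depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# A 10,000 pps flood of TTL-expired packets for one second, against a
# hypothetical 100 pps policer with a burst of 50 packets.
policer = TokenBucketPolicer(rate_pps=100, burst=50)
accepted = sum(policer.allow(i / 10_000) for i in range(10_000))
print(f"{accepted} of 10000 packets reached the CPU")
```

Roughly the burst plus one second’s worth of tokens gets through (about 150 packets); the rest is dropped in hardware and never costs CPU cycles, which for exception packets also means no ICMP message is ever generated for them.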

These configurations need to be tweaked depending on the role of the device in the network.

How network vendors implement protections #

Check these references:

- Cisco: Cisco IOS Control Plane Policing, IOS Control Plane Protection

- Juniper: JunOS Control Plane DDoS protection, JunOS ICMP feature.

Unconditionally dropping unnecessary traffic (e.g. ICMP) #

In some cases, network operators take a much harsher approach and drop any traffic to the CPU that is not strictly necessary. This reduces the DoS attack surface of their core infrastructure.

A good example is ICMP. ICMP has historically been used as a DoS vector against IP routers, with attacks such as ICMP floods and Smurf attacks. ICMP is also used to perform (unauthorised) network reconnaissance 🧐.

The effect on user traffic #

The impact of these protective measures on user traffic is subtle. End hosts typically don’t communicate directly with intermediate routers, and their traffic only traverses the fast path.

There are two main exceptions to that, though:

Path MTU Discovery (PMTUD) requires intermediate routers to send ICMP messages back to the originator in the event of an MTU violation (Fragmentation Needed for IPv4, Packet Too Big for IPv6).

For the reasons explained above (and some others, which will be covered in the MTU tutorial), PMTUD is mostly unreliable or broken, and should be avoided unless you control the entire end-to-end path.
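The failure mode can be illustrated with a toy model of IPv4 PMTUD (DF=1). Each hop either reports its next-hop MTU via ICMP Fragmentation Needed, or silently drops the oversized packet because its ICMP generation is filtered or policed. `Hop` and `send_with_pmtud` are hypothetical names, and the model ignores retransmission timers, TCP MSS and path MTU caching.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Hop:
    mtu: int                 # MTU of this hop's egress link
    sends_icmp: bool = True  # False: ICMP filtered or rate-limited at this hop


def send_with_pmtud(size: int, path: List[Hop]) -> Optional[int]:
    """Return the packet size that finally crossed the path, or None on a black hole."""
    # Each ICMP message lowers the size, so len(path) + 1 attempts are enough.
    for _ in range(len(path) + 1):
        for hop in path:
            if size > hop.mtu:
                if not hop.sends_icmp:
                    return None   # dropped silently: the sender never learns why
                size = hop.mtu    # ICMP Fragmentation Needed carries the next-hop MTU
                break             # retry with the smaller size
        else:
            return size           # the packet crossed every hop
    return None


print(send_with_pmtud(1500, [Hop(1500), Hop(1400), Hop(1500)]))     # 1400
print(send_with_pmtud(1500, [Hop(1500), Hop(1400, sends_icmp=False)]))  # None
```

In the second case, small packets (e.g. a TCP handshake) still go through while full-size ones vanish, which is the classic symptom of a PMTUD black hole.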

Tools like traceroute/tracert, which also rely on intermediate routers generating ICMP messages (Time Exceeded, when the TTL reaches 0), might not be reliable either.
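Similarly, a toy traceroute model shows why a hop displaying `*` does not necessarily drop traffic. Each router is given a hypothetical budget of ICMP Time Exceeded messages it will generate during the trace (a crude stand-in for Control Plane policing or ICMP filtering), while probes keep being forwarded in the fast path regardless. For simplicity, the destination is modelled like any other hop.

```python
from typing import List, Optional


def traceroute(icmp_budgets: List[Optional[int]], probes_per_hop: int = 3) -> List[str]:
    """Toy traceroute output.

    icmp_budgets[i] is how many Time Exceeded messages hop i+1 will send:
    None means unlimited, 0 means ICMP generation is filtered entirely.
    """
    budgets = list(icmp_budgets)  # don't mutate the caller's list
    lines = []
    for ttl in range(1, len(budgets) + 1):
        hop = ttl - 1  # a probe sent with this TTL expires at this hop
        replies = []
        for _ in range(probes_per_hop):
            if budgets[hop] is None:
                replies.append(f"r{ttl}")
            elif budgets[hop] > 0:
                budgets[hop] -= 1
                replies.append(f"r{ttl}")
            else:
                replies.append("*")  # no reply, but the hop still forwards traffic
        lines.append(f"{ttl:2d}  " + "  ".join(replies))
    return lines


for line in traceroute([None, 0, 2, None]):
    print(line)
```

Hop 2 looks dead and hop 3 looks lossy, yet hop 4 answers every probe: the forwarding path is fine, only the routers’ ICMP generation is filtered or rate-limited.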


Next #

This was supposed to be a “quick” post… 🙄.

If there is enough interest, in Part 2 I will dig into the SONiC project codebase⁶ to see how ASICs and NPUs get initialized and configured to protect the Control Plane.

Cheers 🍺!

¹ Switches with L3 support, routers with L2 switching capabilities... the lines are blurry, indeed. Broadly speaking, both types of devices can do similar things nowadays, but they are optimized for different roles (e.g. a ToR switch/router vs. a core IP router).

² At the end of the day, they are just OS processes doing IPC and exchanging state (like routing information) with processes on other network devices.

³ This is very hardware-specific, and some of this information is either not publicly available (under strict NDA) or not obvious in the guides.

⁴ Yes, many devices are unable to fragment in hardware, and some high-end systems have a limited capacity for doing so in the dataplane.

⁵ This is why it’s vital not to trust DSCP/ToS bits and to reclassify traffic when it enters your network, as an attacker could forge packets marking them as Network Control (NC).

⁶ And some other related “open-source” projects.